Continued from: Part 2 – Enumerate Ranges and First/Last

This is really the major piece of work in this release. There are a number of factors as to why these methods were added, but the foremost among them is performance when merging two sets of data. Before we go further let’s look at a typical scenario where we just fetched 1000 records from the server and need to insert them into our local cache. For this example let’s assume our records are 256 bytes, giving us a b+tree order of 32 values per leaf to fill 8kb blocks. This data is key’ed by it’s parent ID + name so the key space we are going to write is very dense. The thing I started to wonder is how much wasted thrashing is going on while enumerating and inserting densely populated keys. Basically we go find a node, rewrite that node, get the next item, find the same node we just had, rewrite it again, and so on. Let’s do a little math and find out how bad is this?

So how many read/write operations are there inserting these 1000 nodes one at a time (btree order 32)?

Would you believe around 1200! It should be obvious it’s over 1000, where are the other 200+ coming from? Splits. Each time we need to insert into a full node we have to split it, that requires rewriting that node, it’s parent, and it’s new sibling. This means that our 68 node tree has written 3×68 times just in splits.

AddRange

So what if we could fill a node before writing it the first time?

Obviously this requires a precondition, the input must be sorted. Once that precondition is met though we can now insert the entire set just as before except we will completely fill a node prior to writing it (if possible). Of course not every key can be placed in any node, thus to gain from this not only will the input need to be sorted, but the keys need to be dense enough that the optimization will help. As an example inserting 100 random GUIDs into a btree of 100,000 GUIDs will not likely see any gain whereas inserting 100 random GUIDs into a btree with 100 items will.

So how many read/write operations are there using this method on these 1000 pre-ordered nodes (btree order 32)?

Only 270+ since that is the same 200+ splits with the addition of another write for each of the 63 leaf nodes. We just gained nearly an order of magnitude. Thus the AddRange methods where born. They use these mechanics and typically see a 5 to 10x improved speed for the insert.

BulkInsert

But wait, we can do better! What if we did not need to split the nodes during write, can we just fill and move on and write in O(n) time? I mean if we can write all 68 nodes once that’s a far sight better than 270+ writes, further we can even reduce the number of nodes needed by leaving them all full.

So how many read/write operations are there using this method on these 1000 pre-ordered nodes (btree order 32)?

Just 33 (assuming it’s currently empty). That means we gain another order of magnitude over AddRange, but what about the existing contents? Well it needs to be entirely read and rewritten back to disk for this to work, and again our input needs to be sorted. Luckily our existing content is already sorted so we can merge it with the input in O(n) time, that is the easy part. There is some added overhead since we will need to rewrite the root and delete all the ‘old’ data, but it’s still O(n) insertion time, where n is the number of items in the b+tree plus the number being inserted.

The other nice thing about this approach is that by rewriting the tree we can use a transaction to swap the root node. This allows an all-or-nothing insert that was previously not possible. In future versions this may be used for replacing or removing ranges of data.

Confirming our Performance SWAG

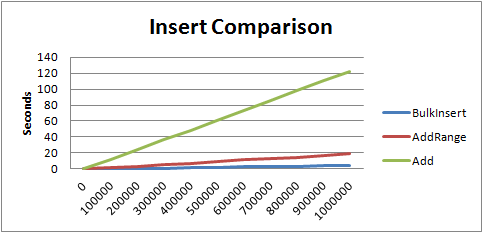

The following graph displays the same data inserted via the various options above:

I’m pleased to see the chart so clearly back up the math. The order of magnitude between variations is not just theory any more ;) The insertion times graphed above are 3 seconds for BulkInsert, 18 seconds for AddRange, and 122 for Add.

*Note: in the real-world case of sparse key inserted to existing data the results will vary widely.

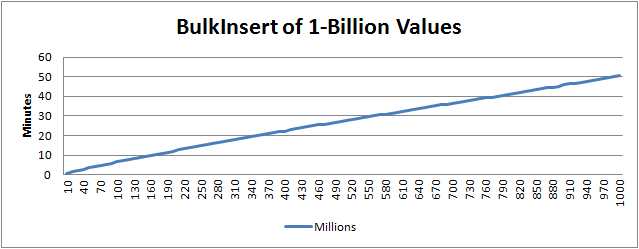

BulkInsert a Billion Rows…

Sure we can do that in under an hour :)

Why would you insert a billion rows? Just because we can. Does anyone know how long inserting a billion rows into SQL Server with Bulk Import takes?

Update: The preceding times were measured with the original file format. The v2 format is much faster that represented here. Additionally I’ve tested up to Int32.MaxValue (2,147,483,647) rows with no issues and linear performance. Keep reading the following articles for more information on the v2 storage format.

Presorting Input

Given the above methods both depend upon presorted data we need to address this. The only complication is that we could be inserting more data than can reasonable be placed in memory. Enter the OrderedEnumeration<T> class. This class takes an enumeration, breaks it into ‘pages’ of data that can fit in memory, sorts it, and if needed stores the result on disk. Finally the class then aggregates all pages into a single ordered enumeration. The whole thing is done in O(n log(n)) time, and given that the disk access is sequential, this can be done much faster than directly inserting the data. I stopped testing at 100 million rows and found no measurable difference between presorted and unsorted input.

Usage Notes:

AddRange will always sort the data. Use AddRangeSorted if the data is already sorted by key. AddRange may also be used to overwrite existing data by providing true for the optional argument ‘allowUpdates’. AddRange is thread-safe and allows concurrent writers to exist in the tree.

AddRangeSorted will assume the input enumeration is already ordered. If the data is out of order, no harm will come; however, it may be no more optimal than just calling Add() for each item. AddRangeSorted is thread-safe and allows concurrent writers to exist in the tree.

BulkInsert will by default sort the input enumeration. Use the overload that accepts a BulkInsertOptions structure to specify the input as presorted if needed. Warning: if the input is not actually sorted and the options provided declare it as being sorted, the resulting structure will be corrupt (or an exception may be thrown). BulkInsert is thread-safe and is a write exclusive operation on the tree; however, it does allow concurrent readers to still read the previous state while the tree is being rewritten. BulkInsert is also a transacted operation, either all records will be added/updated or none but no incomplete results are possible.

Next up BPlusTree 2.0 Changes Part 4 – Rewriting the Storage Format